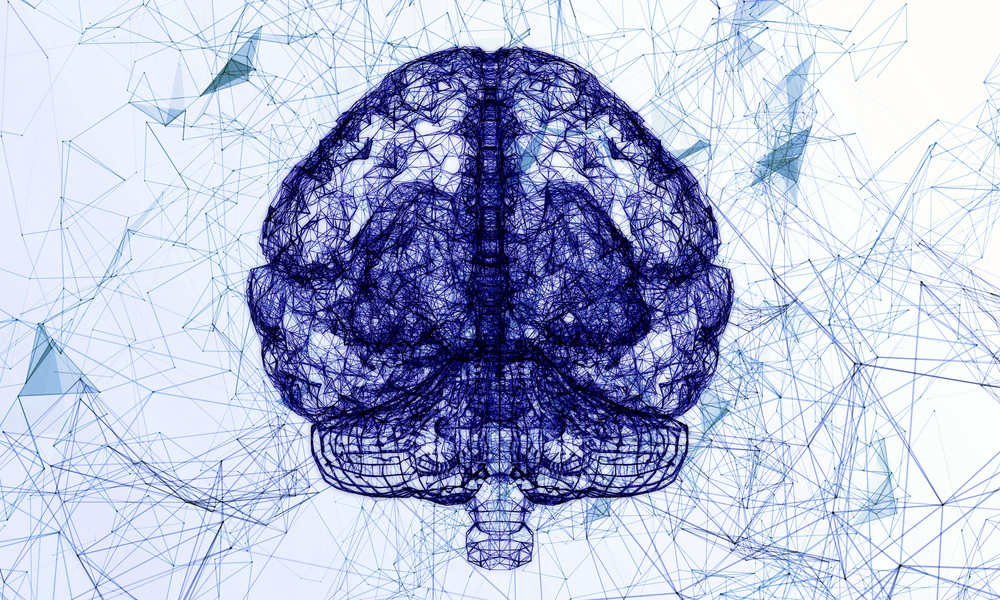

Stanford Researchers Aim to Speak ‘Brain’s Language’ to Heal Parkinson’s Disease

Researchers at Stanford University hope that brain-machine interfaces, also known as neural prosthetics, will become a clinical reality that can help treat Parkinson’s disease.

Scientific interest in connecting the brain with machines and devices isn’t new. It dates back to the 1970s and Jacques Vidal’s Brain-Computer Interface project, which aimed to record electrical signals from the brain that could then be “read” and translated into some sort of action, such as playing a video game.

Today, brain-controlled “super-machines” remain in the realm of science fiction, but small prosthetic devices do not. Brain-machine interfaces are already changing how paralyzed people interact with the world. They have also restored rudimentary vision to people who have lost their sight, prevented seizures, and treated some Parkinson’s symptoms.

The biggest challenge these Stanford researchers identify, however, is understanding what the brain is “telling us” and how to “speak to it” in return. As early as the 1970s, Vidal anticipated that the greatest challenge would be taking the brain’s complex language, encoded in electrical and chemical signals sent between billions of neurons, and translating it into something a computer could understand.

Can this challenge be overcome?

Dr. Jaimie Henderson and Dr. Krishna Shenoy, both researchers at Stanford, have worked on this question for 15 years. They developed a device that, in a clinical study, gave paralyzed people a way to move a cursor on a computer screen and write messages. In similar studies, patients were able to move robotic arms using only signals from their brains.

They didn’t do this alone. Shenoy and Henderson built on many scientific advances, including those of Dr. Paul Nuyujukian, who helped build and refine the software algorithms that translate neuronal signals into cursor movements. For Nuyujukian, the challenge was something like “listening to 100 people speaking 100 different languages all at once and then trying to find something, anything, in the resulting din one could correlate with a person’s intentions,” according to a Stanford news story.

To overcome this challenge, Nuyujukian and his team started by studying brain signals in monkeys. After Nuyujukian adapted those insights to people, the algorithm they created became the backbone of the highest-performing system to date.

Another approach involves listening for times when the brain is “trying to say something wrong.”

NeuroPace’s device uses electrodes implanted on the surface of, or deep inside, the brain to listen for patterns of brain activity that precede an epileptic seizure. When it detects those patterns, the device delivers brief electrical pulses that prevent the seizure or lessen its severity.

This technique, developed by Stanford researchers, could also improve deep-brain stimulation, a 30-year-old treatment for Parkinson’s. The problem with today’s deep-brain stimulators is that they are always on, causing side effects such as tingling sensations and difficulty speaking.

Extracting useful messages from the “background noise” of the brain’s billions of neurons remains a challenge. Helen Bronte-Stewart, another neurology researcher, took a different approach. Focusing on one of Parkinson’s most unsettling symptoms, “freezing of gait,” her team investigated whether the brain might be saying something unusual during these episodes.

They found that the brain does appear to emit low-frequency waves that are less predictable in people who experience freezing of gait than in those who do not, and less predictable during freezing episodes than during normal movement. One potential treatment would be to listen for those signals and deliver well-timed brain stimulation in response.

Neither Nuyujukian’s nor Bronte-Stewart’s approach requires researchers to “understand” the brain’s language. So how important is learning to speak it? Could the lesson be that understanding that language, and how our brains use it, is unnecessary in the search for more effective prosthetics and treatments for neurological disease, as long as researchers can “recognize” it?

Dr. E.J. Chichilnisky believes this will be sufficient only for a while. Chichilnisky, who is working to restore sight to people with severely damaged retinas, recognizes that these approaches may be limited to simple arrays of identical neurons.

To fully learn how to communicate with the brain, Chichilnisky thinks researchers will need to listen to exactly what individual neurons “are saying,” not just whether they are saying anything. For him, understanding neuronal communication is critical: brain-machine interfaces must first figure out what types of neurons their electrodes are talking to, and then how to convert an image into a language those neurons understand. He believes that, ultimately, this is the only way a prosthetic can improve how the brain interacts with the outside world.